We don't do small

Serverless-like experience, limitless capacity, ready for the world’s largest models.

SLA-driven Orchestration

Inference behaves differently for every AI application. Impala automatically adapts infrastructure to each workload’s unique compute and memory patterns.

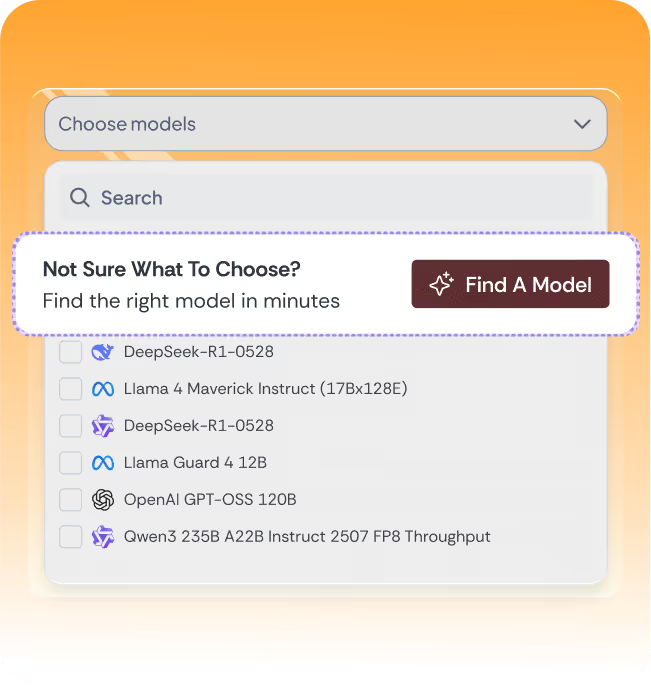

Serverless-like simplicity with any model you choose.

Run the latest models instantly, without managing infrastructure. Dedicated endpoints, deployed on your cloud.

Disaggregated Serving

Compute Fabric is the invisible layer that stitches models and machines together. Any hardware, any workload: unified, abstracted, scaled.

Unparalleled Performance

Fully managed Inference Solution

You define what needs to happen. We make it happen.

Your AI operations, on autopilot.

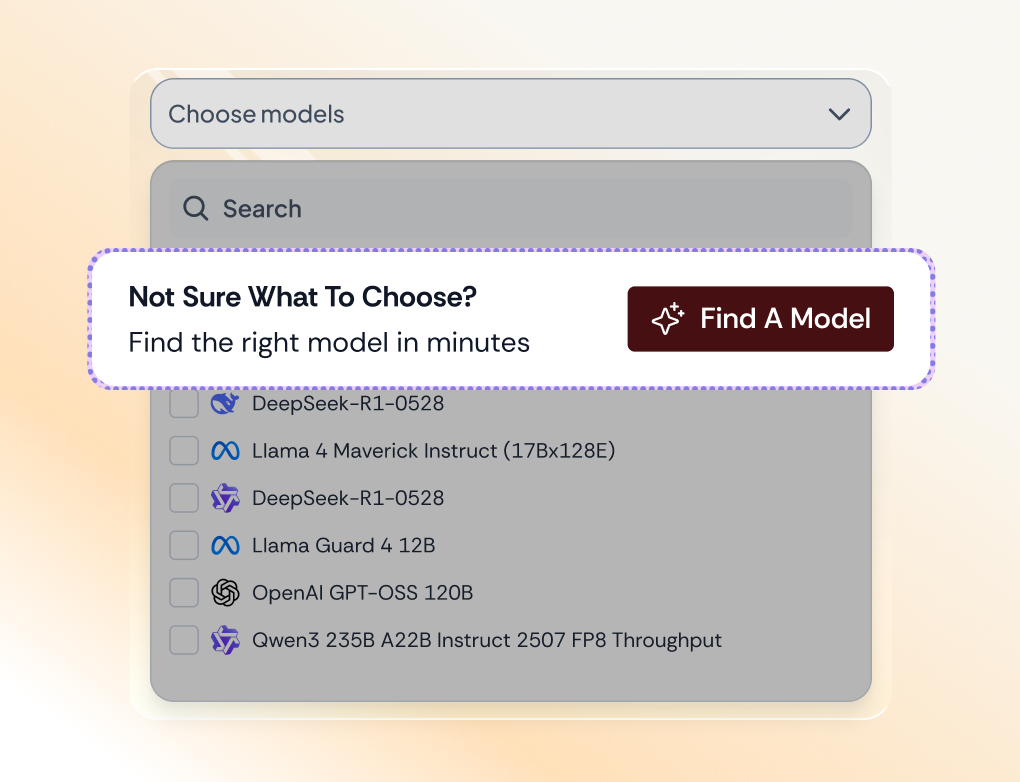

Impala’s Control Plane brings visibility and automation to your inference workloads. Track performance, manage costs, and choose the right models - all without the operational overhead.

Purpose-built for data processing

Answers to all your questions, quickly and clearly

Impala is built for throughput-first inference, not chat-speed latency. We support three broad profiles:

- Near real-time (10 sec–few min) – streaming or event-driven use cases such as document enrichment or auto-responses.

- Async (~1 hr) – workflow or scheduled jobs such as summarization, content generation, or data classification.

- Batch (multi-hour to large-scale) – image/video understanding, labeling, or synthetic data generation.
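
As a rough illustration of how a job's completion deadline might map onto these three profiles, here is a minimal Python sketch. It is not Impala's API: the function name `pick_profile` and the exact cut-offs (5 minutes and 1 hour) are assumptions chosen for illustration, since the ranges above are only approximate.

```python
from datetime import timedelta

# Hypothetical cut-offs: the profile ranges are approximate,
# so these exact boundaries are assumptions for illustration only.
NEAR_REAL_TIME_MAX = timedelta(minutes=5)  # "10 sec to a few min"
ASYNC_MAX = timedelta(hours=1)             # "~1 hr"


def pick_profile(deadline: timedelta) -> str:
    """Return the broad profile name for a job that must finish within `deadline`."""
    if deadline <= timedelta(0):
        raise ValueError("deadline must be positive")
    if deadline <= NEAR_REAL_TIME_MAX:
        return "near-real-time"
    if deadline <= ASYNC_MAX:
        return "async"
    # Anything longer than the async window is treated as batch.
    return "batch"


if __name__ == "__main__":
    for d in (timedelta(seconds=30), timedelta(minutes=45), timedelta(hours=6)):
        print(d, pick_profile(d))
```

In practice the boundaries would depend on the workload's SLOs rather than on fixed constants.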

Yes. Because Impala runs in your account, usage bills against your existing agreements and credits.

We’re a fully managed, distributed LLM-inference platform optimized for massive batch and async jobs. While other stacks prioritize p95 latency, we optimize tokens per dollar at scale.

Our system hunts for capacity across clouds and regions and auto-scales to your SLOs via real-time inventory search and orchestration: no idle clusters, no pre-warming, no manual tuning.